Maybe AI chat buddies aren’t much of a “buddy” after all.

Alphabet, Google’s parent company, has warned its employees not to enter confidential information into Google’s own AI chatbot, Bard, or into any other AI chatbot for that matter. Why? Because AI companies store what we submit to their chatbots.

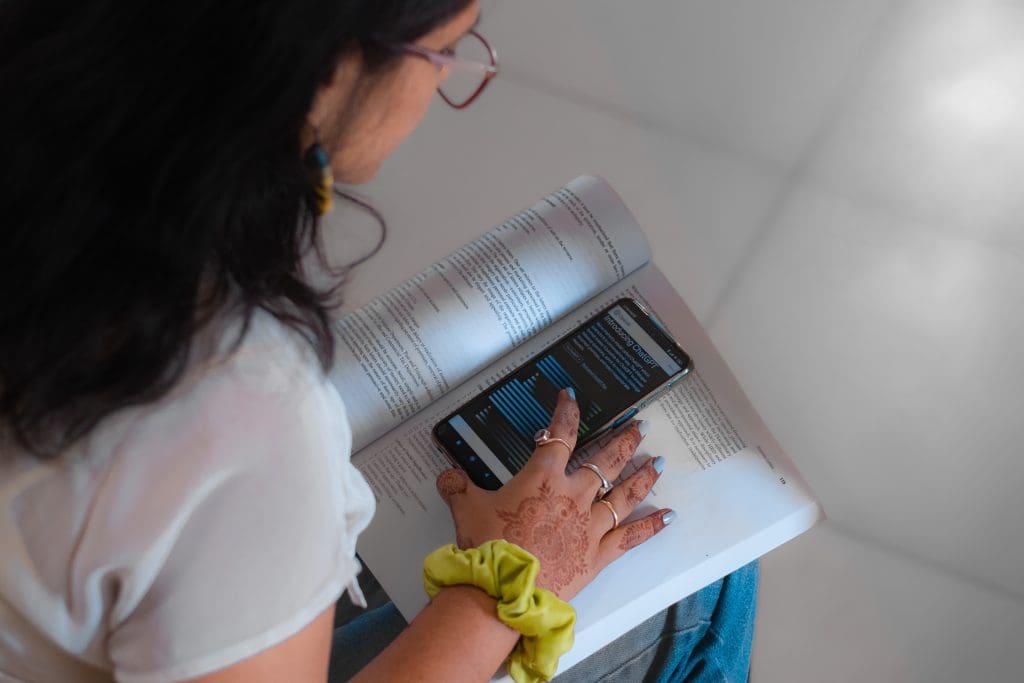

Human employees can access the messages we send to AI chatbots. If you have tried an AI chatbot like ChatGPT, you might have seen a notice saying the company “may use the data you provide us to improve our models.” Studies have also shown how AI models use the data that users give them.

Google is warning users as well as its employees. It has updated Bard’s privacy notice page with the following message: “Please do not include information that can be used to identify you or others in your Bard conversations.”

This serves as a reminder that we should all use AI chatbots and other AI-powered applications responsibly. Submitting private information could harm the people who share it. Now that Google and OpenAI themselves have warned users, you should probably listen to them.